- [15 points] Recall that 1 block is 1 disk sector; and 1 disk sector is 4KB (4096 bytes). Let the schema of a table be:

create table midterm_grades (

netid CHAR(8) character set latin1,

q1_score TINYINT,

q1_comments varchar(100) character set utf8,

q2_score TINYINT,

q2_comments varchar(100) character set latin1,

q3_score TINYINT,

q3_comments varchar(100) character set latin1,

q4_score TINYINT,

q4_comments varchar(100) character set utf8,

extra_credit TINYINT

)

Let’s say that the average comment length is 20 characters long, and that each record header contains 12 bytes of important record information.

Answer the following questions:

a. How many bytes is does the average record consume? (show your work)

b. About 1120 students have taken my databases midterm at Notre Dame (total). Given that records may not span more than one disk sector. How many sectors are needed to store all the midterm records?

2. [15 points] Notice that Q1 did not contain any primary key. If we add a primary key to the netid column, then we will also need to store the index on disk too. Recalculate your answer to Q1-b to take into account a primary key using a B+ Tree on the netid column.

You can use $d$ of either 2 or 170 (or really any number in between so long as you state it clearly and show your work).

3. [30 points] UTF8 encoding is a variable length encoding. This means that sometimes it uses 1 byte, sometimes it uses 2, 3, or 4 bytes to represent a character. How does it know that a character is 1 or 2 or 3 or 4 bytes?

UTF-8 distinguishes this using a unary encoding. That is, if the bits of a character start with 0, then there is only 1 byte being read for this character. 0xxxxxxx represents a 1-byte character (basically the first 127 ascii characters). If the first bits of the character start with 110xxxxx, then there will be two bytes, 1110xxxx means 3 bytes, and 11110xxx means 4 bytes. See this table for example.

| Bytes | 1st byte | 2nd byte | 3rd byte | 4th byte |

|---|---|---|---|---|

| 1 | 0xxxxxxx | |||

| 2 | 110xxxxx | 10xxxxxx | ||

| 3 | 1110xxxx | 10xxxxxx | 10xxxxxx | |

| 4 | 11110xxx | 10xxxxxx | 10xxxxxx | 10xxxxxx |

Hence, the capital ‘A’, which is 65 in decimal/ASCII is represented as 01000001 encoded as ‘A’ in UTF-8.

The winky emoji: 😉 is 128,521 in decimal which is 00000001 11110110 00001001 in binary, which is encoded into UTF8 as 11110000 10011111 10011000 10001001 (with only 21 encoded bits)

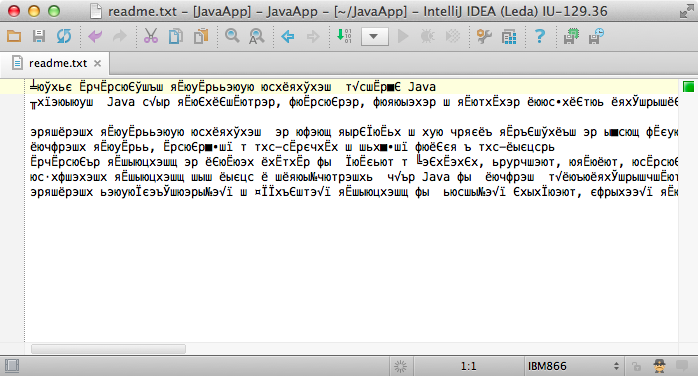

“Encoding errors” happen when a file encoded in one format is decoded in another format:

Let’s work to understand what’s happening here.

a. Let’s say that the string ‘Hi’ was encoded with ASCII.

where H is represented in decimal as 72 and

where i is represented in decimal as 105

How would it appear if decoded in UTF8?

b. Let’s say that the string `关税’ (which is Chinese-Mandarin guānshuì which translates to “tariff” in English is encoded with UTF-8

where 关 is represented in decimal as 20851 and

where 税 is represented in decimal as 31246

How would it appear if decoded with Latin-encoding (i.e., ASCII)? (show your work)

4. [20 points] Let $d$=2. Place the data from the model of the table below into a B+ tree indexing on the following table:

| model | power | weight | price |

|---|---|---|---|

| 1001 | 280 | 40.00 | 21.14 |

| 1002 | 210 | 20.40 | 90.95 |

| 1003 | 142 | 20.40 | 45.78 |

| 1004 | 280 | 40.00 | 60.49 |

| 1005 | 320 | 20.40 | 60.30 |

| 1006 | 320 | 40.00 | 10.49 |

| 1007 | 220 | 40.00 | 52.10 |

| 1008 | 220 | 81.90 | 77.00 |

| 1009 | 220 | 40.00 | 60.50 |

| 1010 | 280 | 81.90 | 77.00 |

| 1011 | 280 | 81.90 | 95.90 |

| 1012 | 280 | 40.00 | 61.49 |

| 1013 | 306 | 20.50 | 52.99 |

5. [0 points] Get to work on Stage 3 Demo!