(Much of the material adapted from notes from Easterbrook and Neves)

Research Checklist

- Pick a topic

- Identify the research question(s)

- Check the literature

- Identify your philosophical stance

- Identify appropriate theories

- Choose the method(s)

- Design the study

  - Unit of analysis?

  - Target population?

  - Sampling technique?

  - Data collection techniques?

  - Metrics for key variables?

  - Handle confounding factors

- Critically appraise the design for threats to validity

- Get IRB approval

  - Informed consent?

  - Benefits outweigh risks?

- Recruit subjects / field sites

- Conduct the study

- Analyze the data

- Write up the results and publish them

- Iterate

Step 1: Pick a topic

I really can’t help you here. Find something that interests you and identify a gap in the knowledge.

Step 2: Identify the Research Questions

Exploratory

Existence:

Does X exist?

Description & Classification

What is X like?

What are its properties?

How can it be categorized?

How can we measure it?

What are its components?

Descriptive-Comparative

How does X differ from Y?

Baserate

Frequency and Distribution

How often does X occur?

What is an average amount of X?

Descriptive-Process

How does X normally work?

By what process does X happen?

What are the steps as X evolves?

Correlation

Relationship

Are X and Y related?

Do occurrences of X correlate with occurrences of Y?
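A relationship question of this kind is often answered with a correlation coefficient. The block below is a minimal sketch that computes Pearson's r by hand; the function name `pearson_r` and the sample numbers are made up for illustration and are not from the original notes. A value near +1 or -1 suggests a strong linear relationship, but it says nothing about causality.

```python
from math import sqrt


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of the same length (>= 2)")
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Sum of co-deviations and sums of squared deviations from the means.
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined when a variable is constant")
    return cov / sqrt(var_x * var_y)


# Hypothetical observations: X = code reviews per week, Y = defects reported.
reviews = [1, 2, 3, 4, 5]
defects = [9, 7, 6, 4, 2]
print(f"r = {pearson_r(reviews, defects):.2f}")
```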

Causal Relationship

Causality

Does X cause Y?

Does X prevent Y?

What causes X?

What effect does X have on Y?

Causality-Comparative

Does X cause more Y than does Z?

Is X better at preventing Y than is Z?

Does X cause more Y than does Z under one condition but not others?

Design

Design

What is an effective way to achieve X?

How can we improve X?

Step 3: Check the literature

Ask yourself: where do these research questions belong?

Step 4: Identify your philosophical stance

What will you accept as truth? There are many philosophical stances here:

Positivist

Knowledge is objective

“Causes determine effects/outcomes”

Reductionist: study complex things by breaking them down into simpler ones

Prefer quantitative approaches

Verifying (or Falsifying) theories

Critical Theorist

Research is a political act

Knowledge is created to empower groups/individuals

Choose what to research based on who it will help

Prefer participatory approaches

Seeking change in society

Constructivist

Knowledge is socially constructed

Truth is relative to context

Theoretical terms are open to interpretation

Prefer qualitative approaches

Generating “local” theories

Pragmatist

Research is problem-centered

“All forms of inquiry are biased”

Truth is what works at the time

Prefer multiple methods / multiple perspectives

Seeking practical solutions to problems

Step 5: Identify Relevant Theories

Theories guide us and help us generalize.

We can identify which theories are applicable and use them to frame our approach. The statement of theory is usually the first sentence of the research paper.

Step 6: Choose the Method

Exploratory

Used to build new theories where we don’t have any yet

Descriptive

Describes sequence of events and underlying mechanisms

Causal

Determines whether there are causal relationships between phenomena

Explanatory

Adjudicates between competing explanations (theories)

What is your way of knowing?

- Lab Experiments

- Rational Reconstructions

- Exemplars

- Benchmarks

- Simulations

- In the wild methods

- Quasi-Experiments

- Case Studies

- Survey Research

- Ethnographies

- Action Research

Step 7: Design Your Study

Step 7.1: Unit of Analysis

Defines what phenomena you will analyze

Choice depends on the primary research questions

Examples:

- Individuals or Groups of people

- Queries

- Systems

- Data instances (as in machine learning)

- Robots

- Pairs of programmers

Step 7.2: Target Population

This determines the scope of applicability of your results

If you don’t define the target population, then nobody will know whether your results apply to anything at all.

Let’s say you have a Unit of Analysis: Developers using Agile Programming

What is your Target Population?

- All software developers in the world?

- All developers who use agile methods?

- All developers in the US software industry?

- All developers in Small Companies in Indiana?

- All students taking SE courses at Notre Dame?

Step 7.3: Sampling technique

- Used to select a representative set from a population

- Simple Random Sampling – choose elements at random so that each has an equal chance of selection (choosing every kth element is systematic sampling)

- Stratified Random Sampling – identify strata and sample each

- Clustered Random Sampling – choose a representative subpopulation and sample it

- Purposive Sampling – choose the parts you think are relevant without worrying about statistical issues.

- Sample Size is important: this is a balance between cost of data collection/analysis and required significance

- Process:

  - Decide what data should be collected

  - Determine the population

  - Choose type of sample

  - Choose sample size
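The probability-based techniques above can be sketched in a few lines of Python. This is an illustrative sketch only: the function names, the `developers` population, and the `company_size` field are hypothetical, and the sample-size helper assumes the goal is to estimate a proportion within a chosen margin of error (the standard formula n = z²·p·(1−p)/e²), which is just one way to make "required significance" concrete.

```python
import math
import random
from collections import defaultdict


def simple_random_sample(population, n, rng):
    """Every element has the same chance of being selected."""
    return rng.sample(population, n)


def systematic_sample(population, k, rng):
    """Pick a random starting point, then take every kth element."""
    start = rng.randrange(k)
    return population[start::k]


def stratified_sample(population, stratum_of, n_per_stratum, rng):
    """Group elements into strata, then sample each stratum separately."""
    strata = defaultdict(list)
    for item in population:
        strata[stratum_of(item)].append(item)
    sample = []
    for members in strata.values():
        sample.extend(rng.sample(members, min(n_per_stratum, len(members))))
    return sample


def cluster_sample(clusters, n_clusters, n_per_cluster, rng):
    """Pick whole clusters at random, then sample within each chosen cluster."""
    sample = []
    for name in rng.sample(sorted(clusters), n_clusters):
        members = clusters[name]
        sample.extend(rng.sample(members, min(n_per_cluster, len(members))))
    return sample


def sample_size_for_proportion(margin_of_error, z=1.96, p=0.5):
    """Sample size needed to estimate a proportion (large population assumed).

    z=1.96 corresponds to 95% confidence; p=0.5 is the most conservative guess.
    """
    return math.ceil(z ** 2 * p * (1 - p) / margin_of_error ** 2)


# Hypothetical population: 90 developers, one third at large companies.
rng = random.Random(0)  # fixed seed so the sketch is repeatable
developers = [
    {"id": i, "company_size": "large" if i % 3 == 0 else "small"}
    for i in range(90)
]
by_size = stratified_sample(developers, lambda d: d["company_size"], 5, rng)
print(len(by_size), "developers sampled,", sample_size_for_proportion(0.05), "needed")
```

A smaller margin of error drives the required sample size up quickly, which is where the cost of data collection enters the trade-off.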

Types of Purposive Sampling

- Typical Case

  - Identify typical, normal, average case

- Extreme or Deviant Case

  - E.g. outstanding successes/notable failures, exotic events, crises

- Critical Case

  - If it’s true of this one case, it’s likely to be true of all other cases

- Intensity

  - Information-rich examples that clearly show the phenomenon (but not extreme)

- Maximum Variation

  - Choose a wide range of variation on dimensions of interest

- Homogeneous

  - Instance has little internal variability, which simplifies analysis

- Snowball or Chain

  - Select cases that should lead to identification of further good cases

- Criteria

  - All cases that meet some criteria

- Confirming or Disconfirming

  - Exceptions, variations on initial cases

- Opportunistic

  - Rare opportunity where access is normally hard/impossible

- Politically important cases

  - Attracts attention to the study

- Convenience Sampling

  - Cases that are easy/cheap to study

  - Beware: this often reduces credibility

Step 7.4: Data collection techniques

Direct Techniques

- Brainstorming / Focus Groups

- Interviews

- Questionnaires

- Conceptual Modeling

- Work Diaries

- Think-aloud Sessions

- Shadowing and Observation

- Participant Observation

Indirect Techniques

- Instrumented Systems

- Fly on the wall

Independent Techniques

- Analysis of work databases

- Analysis of usage logs

- Documentation Analysis

Step 7.5: Metrics for key variables

| Type | Meaning | Operations |
|---|---|---|
| Nominal | Unordered classification of objects | = |
| Ordinal | Ranking of objects into ordered categories | =, <, > |
| Interval | Differences between points on a scale are meaningful | =, <, >, -, $\mu$ |
| Ratio | Ratios between points on a scale are meaningful | =, <, >, -, $\mu$, ÷ |
| Absolute | A count of entities; no units are necessary | =, <, >, -, $\mu$, ÷ |
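The practical consequence of the scale type is which summary statistics you are allowed to report. The sketch below is one hedged way to encode that rule; the `summarize` function and the `SCALE_ORDER` list are hypothetical names, and ordinal values are assumed to be encoded as integer ranks.

```python
import statistics

# Scale types from weakest to strongest; each level permits everything below it.
SCALE_ORDER = ["nominal", "ordinal", "interval", "ratio", "absolute"]


def summarize(values, scale):
    """Return only the summary statistics that are meaningful for `scale`."""
    level = SCALE_ORDER.index(scale)
    summary = {"mode": statistics.mode(values)}  # needs only equality (=)
    if level >= 1:
        # Needs ordering (<, >); median_low always returns an actual data value.
        summary["median"] = statistics.median_low(values)
    if level >= 2:
        # Needs meaningful differences, so the mean is defined.
        summary["mean"] = statistics.mean(values)
    if level >= 3 and min(values) != 0:
        # Needs a true zero, so ratios between values are meaningful.
        summary["max_to_min_ratio"] = max(values) / min(values)
    return summary


# Hypothetical data: programming language (nominal) and task time in minutes (ratio).
print(summarize(["java", "python", "python"], "nominal"))
print(summarize([2, 4, 4, 8], "ratio"))
```

Reporting a mean for ordinal data (for example, averaging Likert responses) is a common way to pick the wrong operation for the scale.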

Step 7.6: Handle confounding factors

Think about what could go wrong.

You need to be able to understand if:

- Your results follow clearly from the phenomena you observed, or

- Your results were caused by phenomena that you failed to observe

Identify all (likely) confounding variables. Control for them or ignore them with justification.
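A small worked example shows why an unobserved variable matters. The counts below are made up purely for illustration (they are not results from any study), and the names `counts` and `success_rate` are hypothetical. Task difficulty acts as the confounder: developers with the tool were mostly given hard tasks, so the pooled comparison points the opposite way from the comparison within each difficulty level.

```python
# Made-up counts: (condition, task difficulty) -> (successes, attempts)
counts = {
    ("tool", "easy"): (81, 87),
    ("tool", "hard"): (192, 263),
    ("no_tool", "easy"): (234, 270),
    ("no_tool", "hard"): (55, 80),
}


def success_rate(condition, difficulty=None):
    """Success rate for a condition, optionally within one difficulty stratum."""
    successes = attempts = 0
    for (cond, diff), (s, n) in counts.items():
        if cond == condition and (difficulty is None or diff == difficulty):
            successes += s
            attempts += n
    return successes / attempts


# Pooled comparison: ignores the confounder.
print("pooled:", success_rate("tool"), "vs", success_rate("no_tool"))

# Stratified comparison: controls for the confounder by comparing like with like.
for difficulty in ("easy", "hard"):
    print(difficulty + ":",
          success_rate("tool", difficulty), "vs",
          success_rate("no_tool", difficulty))
```

Stratifying is only one way to control for a confounder; randomized assignment or matching subjects serve the same purpose at design time.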

Step 8: Critically appraise the design for threats to validity

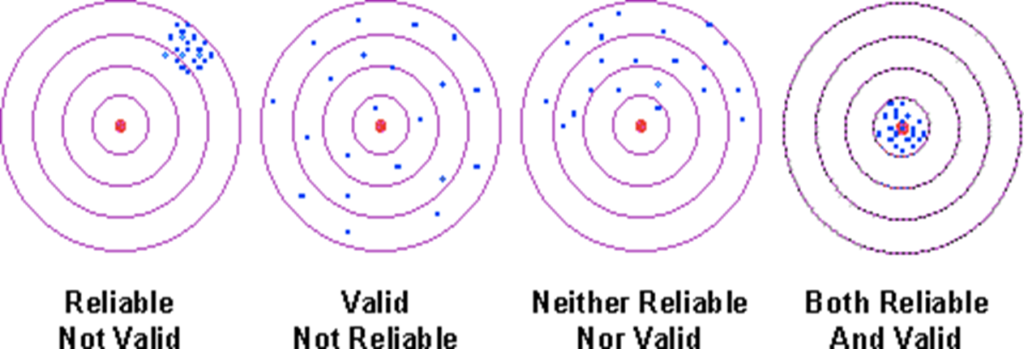

Reliability: Does the study get consistent results?

Validity: Does the study get true results?

Construct Validity

- Are we measuring the construct we intended to measure?

- Did we translate these constructs correctly into observable measures?

- Did the metrics we use have suitable discriminatory power?

- Using things that are easy to measure instead of the intended concept

- Wrong scale; insufficient discriminatory power

Internal Validity

- Do the results really follow from the data?

- Have we properly eliminated any confounding variables?

- Confounding variables: Familiarity and learning

- Unmeasured variables: time to complete task, quality of result, etc.

External Validity

- Are the findings generalizable beyond the immediate study?

- Do the results support the claims of generalizability?

- Task representativeness

- Subject representativeness

Empirical Reliability

- If the study was repeated, would we get the same results?

- Did we eliminate all researcher biases?

- Researcher bias: subjects know what outcome you prefer

Are we measuring what we intend to measure?

Seek help when in doubt.